Generative AI and Changes to Knowledge Work

1 Introduction

Rapid progress in the development of AI systems has – once again – inspired speculations about the future of work. Unlike in prior periods of rapid automation, however, uncertainties regarding the impact of technology do not concern repetitive tasks of manual labor but knowledge work (Brynjolfsson et al., 2023; Dell’Acqua et al., 2023). The predictions of the much-acclaimed book The Second Machine Age (2014) seem to be coming true. In this book, Eric Brynjolfsson and Andrew McAfee argue that artificial intelligence (AI) technologies will have an effect on cognitive fields of work comparable to the effect industrial automation had on blue-collar work. As in many other interpretations, the perspective is one of a “race against the machine,” the title of another work by these authors (Brynjolfsson & McAfee, 2012; see also Acemoglu & Restrepo, 2018).

Whereas only 10 years ago such statements seemed to address a distant future, they appear to be coming true in the present with the advent of generative AI (genAI), as this incarnation of AI advances into fields so far thought to be the exclusive realm of human action. GenAI applications seem to possess skills that surpass those of humans, processing and generating far more information instantly. They also seem to possess creativity, as they can generate poems, create graphics, or compose music. In addition, the results they produce appear authentic in the sense that it is difficult to judge whether they stem from human intelligence or AI (Mei et al., 2024).

The presentation of ChatGPT by OpenAI was aptly described as the “iPhone moment” of AI by the chief executive officer of the chip producer Nvidia, owing to the degree of public attention it stirred and its extraordinarily fast adoption by consumers. A new practical dimension entered the hitherto rather speculative discourse about AI as millions of users began to experiment with ChatGPT and shared their experiences on social media, reporting both ridiculous failures and astonishing accomplishments (e.g., Korkmaz et al., 2023; Taecharungroj, 2023).

Despite the widespread accessibility of ChatGPT for experimentation, the discourse about it lacks an empirical foundation. Expectations and speculations are rising to new peaks with the public excitement that follows each release of a new genAI model. On the one hand, much of the discussion follows the established patterns of a race between men and machines. A study by Goldman Sachs projects a “significant disruption” of labor markets and calculates that up to a quarter of all jobs might be substituted by genAI-based automation (Hatzius et al., 2023).

On the other hand, studies test the potential of the “augmentation” of human work through genAI in an experimental setting. An experiment on the use of ChatGPT for support in mid-level professional writing tasks found an increase in work productivity and a decrease in inequality between workers because ChatGPT benefits “low-ability workers” more (Noy & Zhang, 2023). A similar experimental investigation into the impact of ChatGPT on customer support work reported significant improvement for novice and low-skilled workers but a minimal impact on experienced and highly skilled workers (Brynjolfsson et al., 2023). Further, the usefulness and effective application of ChatGPT to rather complex tasks have been experimentally tested repeatedly (e.g., for coding tasks, Liu et al., 2024; for idea generation, Haase & Hanel, 2023; for natural language processing, Alawida et al., 2023).

Although such studies help in formulating hypotheses about the impact of genAI in the workplace, their empirical scope remains very limited, particularly when it comes to assessing real changes in work routines and the actual integration of ChatGPT beyond speculations and experimental settings. Systematic research about the actual effect of genAI on professions is still in its infancy. In this paper, we set out to explore the question of how genAI is currently perceived and used in knowledge work. Based on qualitative expert interviews and a quantitative survey, we investigate the perception and use of genAI in three fields of knowledge work that are likely to be particularly exposed: information technology (IT) programming, academic work in science, and coaching. Focusing on professional skills, creativity, and authenticity as crucial aspects of knowledge work, we ask how genAI might change the professional core of this work.

Our results belie the expectation that human expertise and skills lose importance with the introduction of genAI: on the contrary, debates about and experiences with genAI help clarify and revalue the core of a profession’s identity. Our study thus highlights that professions consist of more than the sum of single work tasks and contain experiential and tacit knowledge about how to frame, prepare, and interpret work steps, which are difficult for machines to replicate. However, there are concerns that professions could be hollowed out and, especially, that the quality of products and services could deteriorate as automated “good-enough-versions” of former offers become commonplace.

The remainder of this contribution is structured as follows. In the next section, we briefly introduce the framework through which we approach the relationship between humans and genAI, which connects insights from critical informatics with a theoretical perspective emphasizing the complementarity between technology and human work. In Section 3, we present the methods we used for gathering and analyzing our data. In Section 4, we describe the results concerning the impact of genAI in terms of skills, creativity, and authenticity in IT programming, science, and coaching. In the last section, we discuss the results comparatively and draw conclusions to develop an understanding of the impact of genAI on knowledge work.

2 Research Framework and State of the Art

To approach the impact of genAI on knowledge work, we connected two theoretical threads that caution against oversimplified expectations regarding the substitution of work by technology. The first are perspectives in critical informatics pioneered by Joseph Weizenbaum and further developed by academics like Phil Agre (1998), Lena Bonsiepen and Wolfgang Coy (1989, 1994), Robert Kling (1991, 2002), and Abbe Mowshowitz (1981). Weizenbaum, who invented one of the first chatbots, ELIZA, became an outspoken critic of exaggerated expectations of AI after examining the effects of his chatbot’s use. He was especially critical of the common anthropomorphization of technology that is inherent in the term “artificial intelligence.” In Computer Power and Human Reason (1976), Weizenbaum argued that human intelligence was categorically different from automated calculations: AI surpasses human intelligence in many respects, but it lacks the intuition, context-sensitivity, and reflectivity of human reasoning. Similarly, Robert Kling (1991) cautioned against overly optimistic expectations of AI, finding technology development to be oriented more towards technological solutionism (an overreliance on the capabilities of technology to solve social problems) than towards addressing the underlying causes of social problems. In particular, the question of how to adequately mirror, represent, and formalize social processes within technological systems as a precondition for supporting them was regarded as a crucial but still relatively neglected question in IT design (Agre, 1998; Bowker & Star, 1999). These works prepared the ground for current research on ethics in AI development, which takes a critical stance regarding how current technologies interfere with the domains in which they are applied (Mühlhoff, 2025).

The work of Weizenbaum and other critical computer scientists intersects with a second stream of literature that we build on in this study. These contributions highlight the complementarity of artificial and human intelligence and AI’s potential to augment human decision-making (Daugherty & Wilson, 2018; Davenport, 2018; Ramge, 2020). From this perspective, decision-making comprises various functions that involve data collection, analysis, and interpretation. Machines can be instrumental in supporting these functions and open up new possibilities for better-informed decisions. However, it remains the responsibility of humans to dynamically contextualize and interpret material, which requires domain-specific and experiential knowledge that is often difficult to codify (Autor, 2015).

These insights into the differences between human intelligence and AI, on the one hand, and the possibilities for their interaction and the augmentation of human intelligence, on the other hand, are important cornerstones for the analysis of the use of genAI in knowledge work. However, while they remain valid for many conventional automation technologies, the specificity of genAI, and especially genAI chatbots, lies in the dialogical way in which their potential unfolds. These tools are not a static set of machinery or algorithms that can be isolated from human interference, but a technology that evolves in interaction with human activity and, therefore, also transforms work processes and knowledge requirements or resources. Consequently, examining the iterative and dynamic relationship between humans and genAI has become necessary to analyze and understand possible changes in knowledge work (Schulz-Schaeffer, 2025).

A growing body of research has begun to quantify the capabilities and potential applications of genAI tools in professional contexts (e.g., Adam et al., 2024; Memmert et al., 2024). For instance, studies have shown that the use of ChatGPT can significantly increase productivity in professional writing tasks, with one study reporting a 40% decrease in the average time taken to complete tasks and an 18% improvement in output quality. Using these tools also appeared to reduce worker inequality, benefiting participants with weaker skills the most (Noy & Zhang, 2023). Other studies have demonstrated genAI’s potential for efficient task support in experimental settings (e.g., for code generation, Liu et al., 2024; for emergency triage, Kim & Im, 2023; for college essay writing, Tossell et al., 2024) or predicted substantial job transformations and task automation (e.g., for teacher roles, Yu, 2024; for advertising, Osadchaya et al., 2024).

These studies highlight the potential effects of genAI use on productivity, but their findings mostly rely on experimental settings that focus on the execution of single tasks. As a result, they do not allow an understanding of how genAI is introduced into complex everyday work situations. In knowledge professions, this process is often characterized by various contingencies related to orientation, learning, priority readjustment, and strategies for the use of technologies (Sun et al., 2024). An approach is therefore needed that focuses on “AI in the wild” (ibid.), that is, on the challenges of integrating AI tools into the complex and contingent setting of everyday work, which involves individual decisions about which genAI application to choose and implement for particular tasks from a constantly growing arsenal, learning how to use them, and evaluating their results. Such an approach is particularly useful for understanding which human competencies are needed to complement genAI use. In this context, our study addresses how genAI affects the working practices of professionals and offers a better understanding of how the latter define the indispensable “human factor” in their profession.

To do so, we focus on three particular aspects of knowledge work central to current debates on the use of genAI. First, debates revolve around which skills can be replaced by genAI, leading to a steady stream of new estimates of which jobs will be substituted by this technology. In addition, questions are raised about the new skills necessary to use genAI effectively (e.g., Korzynski et al., 2023), while concerns arise about professional skills that are not only replaced but might even be entirely forgotten as a result of reliance on automated processes (Bainbridge, 1983). These are long-standing debates in the sociology of work. Technological change was initially associated mainly with deskilling (Braverman, 1974) and tighter control over work performance (Edwards, 1980), but rising demands for flexibility and contingency in more complex arrangements create requirements for higher skills and increased independent decision-making by employees (Kern & Schumann, 1984). Requirements for contingency and flexibility have constituted a barrier to automation. However, genAI is expected to alter the relationship between skills and automation once again, because learning algorithms might be better equipped for task automation in contingent work situations (Autor, 2015; Huchler & Heinlein, 2024; Schulz-Schaeffer, 2025).

Second, creativity has so far been understood as a characteristic of humans. Yet, whether genAI also possesses creativity has become an important research question (Gilhooly, 2024; Guzik et al., 2023). Although some argue that genAI is only able to recombine given facts in the statistically most likely way (Runco, 2023), others highlight how this recombination nonetheless allows for new ways of seeing things and finding creative solutions (Haase & Hanel, 2023). This raises the question of which kinds of tasks people actually perform using genAI in their domain of knowledge work when originality or innovativeness is needed to produce results. In addition, questions arise about the authenticity of results and the need for human involvement (e.g., Brüns & Meißner, 2024). Because it matters where results come from and who is accountable for them, the substitution of human expertise by technology also confronts users with epistemological questions about the explicability of results, ethical issues about responsibility for proposed solutions, and legal questions about copyright (e.g., Vredenburgh, 2022; Samuelson, 2023; Mühlhoff, 2025).

Accordingly, we address knowledge work in terms of (1) the skills needed not only to accomplish work tasks but also to deal with new genAI tools, (2) creativity, understood as innovative and original approaches to task fulfilment, and (3) authenticity, which highlights the need for results to be explainable and reliable and for someone to take responsibility for them. Our study thus analyzes perceptions of how genAI changes knowledge work with regard to these three critical aspects.

3 Methods

We limited our investigation to genAI systems that operate based on large language models and generate written language. We selected three domains of knowledge work to investigate these issues: IT programming, science, and coaching. These domains represent relevant but contrasting fields of knowledge work, in which the application of genAI is imminent and the issues of (re)skilling, creativity, and authenticity strongly matter, albeit in different ways. These fields differ in terms of the standardization of tasks, the degree of openness regarding the definition of problems and solutions, and the extent to which results can be checked and controlled. In IT programming, professionals deal with the production of code based on a standardized language, which not only delimits the modelling of problems and solutions but also facilitates control of the results. In contrast, science is characterized by very high standards for the originality of ideas and the transparency of methods, which form the basis for the truthfulness and reliability of results. Meanwhile, coaching professionals rely on flexible adaptation to individual needs, selecting methods and discussing topics based on empathy and a situational understanding of human interaction as the central aspect of their profession. Therefore, we assumed that the extent to which genAI is already integrated into knowledge work, or treated more cautiously, differs across these three professional settings.

To conduct our study, we applied a mixed-methods approach. The qualitative analysis consists of 14 semi-structured interviews with 16 experts from the aforementioned professional fields in Germany. These interviews lasted 60–90 minutes and were conducted between May 2023 and March 2024.

Table 1: Domains, interviews, and areas of expertise

| Domain | Interview | Area of expertise |
|---|---|---|
| Science | Interview Science 1a | Good scientific practice |
| | Interview Science 1b | Plagiarism |
| | Interview Science 2 | Computer scientist |
| | Interview Science 3 | Funder |
| | Interview Science 4a | Publisher |
| | Interview Science 4b | Research analytics |
| Coaching | Interview Coaching 1 | Coaching Association |
| | Interview Coaching 2 | Scientist |
| | Interview Coaching 3 | Coach |
| IT programming | Interview Programming 1 | Management |
| | Interview Programming 2 | ML engineer |
| | Interview Programming 3 | GenAI service provider |
| | Interview Programming 4 | Frontend |
| | Interview Programming 5 | DevOps |
| | Interview Programming 6 | IT Association |
| | Interview Programming 7 | Frontend/full-stack |

We selected a sample of representatives of professional associations and other experts with knowledge of the general reception of genAI applications in their occupational field, as well as professionals who used genAI in their work routine and were able to report on their experiences working with these tools. With the interviews, we aimed to provide detailed insights into the reasoning of practitioners and experts in the surveyed professions as regards genAI. We asked about their usage of genAI and their perceptions of the uptake of these tools in their field, as well as how their use affected work routines and work processes. We also sought to reconstruct the current state of implementation in each field through the qualitative data.

The audio recordings of these conversations were transcribed and analyzed through qualitative content analysis using MAXQDA. Two coders worked iteratively to develop a coding system based on the three main categories of skills, creativity, and authenticity. Subcategories derived from the interview material were added inductively during the process. The results were then condensed into summaries for each category in each professional domain and subsequently analyzed comparatively.

Building on the analysis of the qualitative interviews, we designed a quantitative online survey to provide a broader perspective on how a larger number of professionals use and perceive genAI.¹ To explore the perceptions of genAI’s impact across our three domains (IT programming, science, and coaching), we conducted three parallel studies in December 2023. Participants were recruited through the Prolific platform, targeting individuals from the United States and Europe who used AI technologies regularly on a weekly basis. To ensure relevance and expertise in each domain, we employed pre-screeners tailored to each field: in IT, we required experience in computer programming, familiarity with various programming languages, and knowledge of software development techniques; for science, we focused on academics with research functions; and for coaching, we sought individuals in consultant, coach, therapist, personal trainer, or well-being counsellor roles.

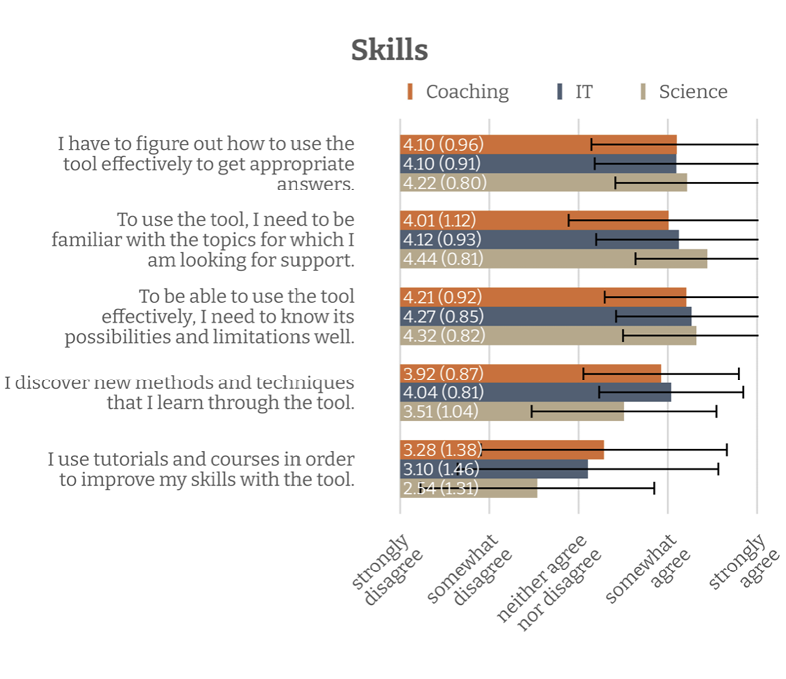

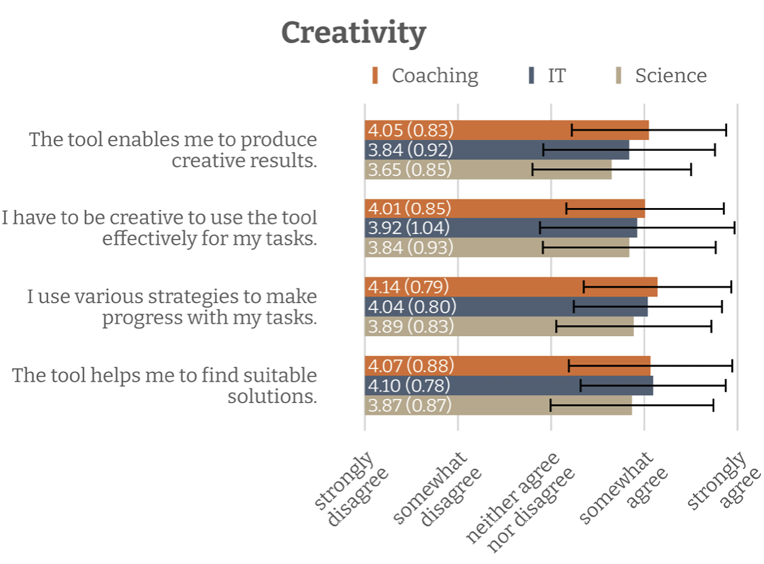

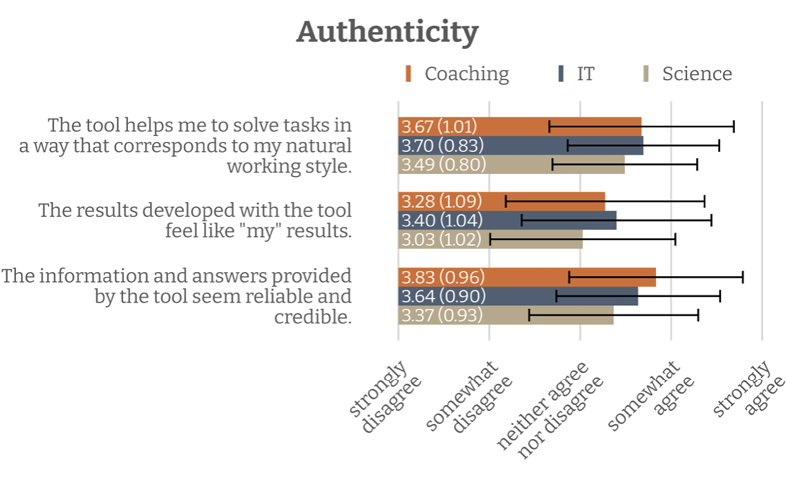

Participants came from diverse educational backgrounds, spanning all levels of education. The study aimed to assess their perceptions of the impact of ChatGPT and similar tools on skills, creativity, and authenticity in their respective fields. The questionnaire created for the study used items rated on a five-point Likert scale. The precise items and the results are shown in Figures 1–3.

4 Findings

4.1 Generative Artificial Intelligence in the Three Domains of Knowledge Work

With this study, we set out to investigate the perceptions and use of genAI in three domains of knowledge work that are likely to be particularly exposed in different ways: IT programming, academic work in science, and coaching. IT programming is one of the pioneering fields to test and use genAI tools in professional practice (Nguyen-Duc et al., 2023; Stack Overflow, 2023). The introduction of ChatGPT and other genAI tools has generated strong interest among programmers in testing the potential and limitations of these tools and in using them to perform various tasks more quickly. The implementation of genAI is expected to significantly alter work routines in programming (Zhang, 2024; Stack Overflow, 2024), but it is also in line with decades of constant change due to the emergence of new technical devices, services, and possibilities, which have so far consistently increased the demand for programmers (Felderer et al., 2021).

In science, new genAI tools are developed to support and facilitate a variety of practices, from literature reviews to the development of new research questions. The advent of genAI has sparked much debate among researchers and research managers, editors and publishers, and funders. These discussions focus on how the use of genAI might affect the quality of research and its evaluation, the publication and grant application system, and the overall organization of research (Fecher et al., 2023; Stokel-Walker & Van Noorden, 2023; van Dis et al., 2023; Bergstrom & Ruediger, 2024).

Lastly, in the evolving coaching landscape, the potential benefits of genAI are acknowledged (e.g., Graßmann & Schermuly, 2021; Passmore & Woodward, 2023; Terblanche, 2024), although the overall sentiment among coaching professionals remains one of caution. This apprehension stems from the perception that while genAI tools can support some functions of coaching, they are limited when it comes to the profession’s core competencies, which are deeply rooted in human interaction, empathy, and situational understanding (Grant, 2003).

In the following subsections, we summarize our findings regarding the expectations of our interview partners in each profession with regard to changes in requirements for skills, creativity, and authenticity.

4.2 Skills

IT Programming

The expert interviews examined diverse aspects of changes in skill requirements brought about by the use of genAI in programming. These included the identification of areas suitable for genAI application, the skills required for its use, and the importance of human expertise in various contexts. Our interview partners emphasized that, in addition to the core function of coding, the tasks of programmers also include customer meetings, team meetings, code reviews, and the documentation of code and programs. For example, a DevOps professional, whose job was to focus on the integration of IT applications and software development, explained: “A large part of my daily work consists of only 30 – 40% coding. The rest is meetings and other technical tasks that don’t involve coding.” (Interview Programming 5)

The potential use cases for genAI lie primarily in generating code and in producing texts and documentation for code snippets and programs. In coding, genAI was found to support routine tasks and provide suggestions for coding solutions. However, the participants in the online survey agreed that programmers must still retain a sound understanding of the underlying processes (see also Figure 1, Statements 1, 2, and 3). Similarly, our interview partners specified that this knowledge was important to avoid potential errors and bugs.

The participants also pointed out that genAI offers advantages in documenting the coding process, providing supplementary information that explains the content of and rationale behind code, which was previously neglected due to time constraints. The integration of genAI is therefore expected not only to save time but also to lead to higher quality and traceability through better documentation, for example, in code reviews.

The interviews also raised the question of how the use of genAI changes learning and knowledge. Interview participants expressed concern that the experiential knowledge currently acquired as a junior programmer will be replaced by the use of genAI and that self-learning will fall by the wayside. According to them, it is essential that programmers continue to have the skills to recognize and identify problems and give instructions independently, as well as acquire and test the knowledge required to use genAI tools effectively (see Figure 1). A senior programmer stated:

Actually, we had a conversation about this, not about ChatGPT specifically, but about the quality of the code that I’m seeing over and over and over, a lot of really bad code… I know that a lot of code is generated by the chat, so it’s fine. I’m confident that if they [the junior programmers] know more about React and Frontend [specific coding practices], they will make better use of ChatGPT. The outcome will be much greater. (Interview Programming 7)

Additionally, a focus on training was seen as key to bridging the gap between human expertise and AI assistance. The same interview partner expanded:

I think the solution is to train people. I cannot ask you [as a junior programmer] to stop using ChatGPT because that’s nonsense. What I can do is teach them. If they attend a short 15-minute knowledge-building meeting per week, they’ll gain some insights. Next time they use ChatGPT, they’ll think: ‘Oh, I know this, and I know there’s a better way of doing this than the suggestion ChatGPT is giving’. (Interview Programming 7)

Science

Among science professionals, the debate on how genAI alters the social practices of conducting research in terms of the (un)learning of skills and the gain or loss of expertise centered on two crucial topics. First, our interviewees discussed what skills are needed to properly use genAI to support research work. They mentioned skills such as the critical assessment of genAI results and the need to understand how genAI works to understand its limitations:

We can now outsource a lot, delegate a lot to tools, which we must understand in terms of their functionality, just like running a factory: you also need to understand how the factory works, and you’re responsible for the final output. You also need to engage with how things work in the factory and understand it at least in broad terms. And if something gets stuck somewhere, you need to have an idea of how to fix it. (Interview Science 2)

The interview partners also highlighted skills necessary for using genAI tools, such as prompt engineering: “I don’t have a set of prompts that just produce a text and that’s it... I should know how to set prompts, and it’s a fluid process.” (Interview Science 1a)

This was corroborated by our quantitative findings, which suggest that science professionals need a stronger sense of security about how these tools work, even though academics seem to feel less need than professionals in the other fields to learn how to use them properly and effectively (see Figure 1).

Interview partners also addressed the question of how to cope with the rapid development of new genAI tools, stating: “enduring this ambiguity, this speed, this flexibility, these are enormous challenges.” (Interview Science 2)

Some highlighted the necessity of constantly adapting to this development, either to use genAI or to regulate it. Accordingly, they described responsiveness and permanent flexibility as new skills for using genAI. Taking these developments and needs into account, our interview partners agreed that genAI can help with writing text and analyzing data in the research process. Yet, they also argued that perceptions of the role of genAI in science differ between disciplines:

You really have to look at it specifically by discipline… Because there are simply different traditions and conventions in how one deals with texts, how one works scientifically, that is, what it means to work lege artis. And this also affects ChatGPT because the text itself, as a medium, has a very different status in different disciplines. So, someone who writes a dissertation in the humanities using ChatGPT is doing something entirely different from generating a methods description in, let’s say, biochemistry. (Interview Science 1b)

While in the natural sciences putting results into text tended to be seen as a task that can be easily standardized and, therefore, automated, in the humanities and social sciences, the production of text was understood as an integral part of the research process, and the produced text was considered to be the actual result. Some interviewees even feared that the use of genAI as a tool to speed up the writing process could lead to an “asymmetry” (Interview Science 1a) and to the dominance of disciplines that are more adaptive to integrating genAI than others. The latter might then fall behind in the speed at which they produce grant proposals and publications, which are the dominant currency in science for obtaining positive evaluations and funding.

Our interviewees also addressed the limits of genAI and its use. They discussed what kind of scientific work can be automated and which work should still be performed by human experts. Some interviewees differentiated between standard tasks in text production and data analysis and tasks that require human sense-making:

I believe that for most people, one thing that can still be assumed is the distinction between genuinely scientific content and mere text, which would be one possibility. I’ve done something, conducted a study, and I have all the results, and I’ve just written them down as bullet points. Then, I tell ChatGPT to turn them into a continuous text. And then I simply check whether these bullet points are sensibly connected. That would be one way to separate mere text work from scientific content. (Interview Science 1b)

Tasks that were regarded as necessitating human sense-making were the production of research results but also the checking, weighing, and evaluation of results. Our interview partners felt that the possibility of using genAI for these tasks was limited and depended on whether humans are able to understand and reflect on how genAI does what it does. Yet, some interviewees questioned the use of genAI even for standard tasks:

Many would say, ‘Oh, writing an abstract is a bit tedious; it takes away time from the important stuff,’ yet it is a skill that I practice by doing it. And if we outsource certain skills to AI, we will eventually lose these skills. And then the question arises of whether the ability to condense a scientific text into an accessible format is a skill that researchers should have and not outsource to AI. (Interview Science 1a)

They highlighted that even if genAI can perform standard tasks such as writing an abstract or conducting a literature review, scientists must still possess these skills to be able to judge the results of genAI. This leads to a new skill, namely, the ability to “manage” the use of genAI, which one interviewee identified as the most urgently needed:

Where we feel overwhelmed because it is so extremely fast paced, it feels like we can’t keep up... And then, with certain new features, to consider which scenarios fit. How can I even use this? And to see and identify these scenarios, simultaneously identify the risks, and then also check and manage them. This is truly a whole new world we’re diving into. (Interview Science 2)

Accordingly, managing genAI involves not only the question of who decides on which software is bought and used at a research organization but also how to handle the possibilities for genAI use. As the capabilities and use cases of genAI expand rapidly, this brings up the question of who sets the rules for good scientific practice when working with genAI.

Figure 1: Comparative assessment of the perceived impact of ChatGPT on skill enhancement in IT programming, science, and coaching

Coaching

According to our interview partners, the integration of genAI in coaching is creating a divide between traditional coaching methods and technologically enhanced practices, which are still rarely used. Hence, they could not discuss precise new skills required to use genAI tools adequately but instead differentiated between skills that may or may not be affected by genAI use. The results of our quantitative survey suggest that actual tool use is intuitive and not thought of as requiring specific skills (see Figure 1).

The participating coaches recognized the support genAI can provide in streamlining specific tasks, such as scheduling, initial assessments, and gathering basic information and standardized methods. Highly common and simple coaching issues like time management can already be outsourced to online courses enhanced by genAI (e.g., through coaching platforms such as CoachHub or BetterUp). However, this was deemed applicable only to concise topics that follow a somewhat structured approach.

While our interview partners acknowledged the potential of genAI-based coaching tools for these common issues, they did not consider genAI to be applicable to complex individual problems, for which attuned emotional support is needed. Yet, they saw another major advantage of genAI-enhanced tools in bridging the time gap until a human coach is available. Some individuals might need immediate support, but the financial or time resources may not be available to both coach and coachee. In such cases, using available digital tools was described as “better than nothing” by our interview partners. One coach even noted:

One of the promises these platforms make in their PR and advertising is ‘coaching is democratized.’ But not everything about real coaching can be democratized. Only access is democratized, sure, and that’s fine. (Interview Coaching 1a)

However, this interview partner also emphasized that although genAI may offer lower entry barriers, the service it provides remains distinct from the full depth of human-driven coaching.

Furthermore, the interviewees found genAI useful in supporting the collection and analysis of data during sessions. This application suggests a modest but significant integration of genAI into the coaching process through the provision of complementary information.

Additionally, genAI’s potential to assist in the coaching process raised important questions about the balance between technology and human intuition. Interview partners acknowledged that genAI can offer new insights but saw the critical judgment about which of these insights are truly valuable and applicable to a specific coaching context as a uniquely human skill. One coach reflected on an experience during a coaching situation:

There was a person behind this [the coachee] who always had the feeling that what they were doing wasn’t enough. So, this is a very deep-rooted belief. And this was also a borderline case, where I was almost considering whether I should even coach this... And I wonder: when such a boundary is reached, does a tool say stop? And how does the tool say that? (Interview Coaching 3)

As the interviewees argued, genAI must be applied carefully in coaching practice, and human sense-making and intuition are still irreplaceable. In short, the interviews revealed that although genAI can augment certain aspects of coaching, emotional intelligence, empathy, and the capacity for deep human connection remain central to human coaching practices. Emotional attunement, developing trust, and understanding subtle contextual cues were perceived as distinctly human attributes that genAI cannot replicate.

The interviewed coaches thus emphasized both the skills associated with deploying genAI effectively in coaching (i.e., knowledge of the tools, an understanding of their workings, and the ability to leverage them efficiently) and a critical awareness of the technology’s limits. As the coaching profession continues to grapple with the implications of genAI, the prevailing view is one of cautious integration, where genAI’s role is confined to augmentative and supportive functions, always secondary to human insight and connection.

4.3 Creativity

IT Programming

In IT programming, the main benchmark for work results is the functionality of code in terms of its ability to meet its intended objectives (which are mostly determined by customers). Thus, creativity is not typically recognized as a core competence. However, our interview partners stressed the requirement to creatively choose adequate tools and procedures and to master the expanding toolbox of possibilities of genAI technology. Programmers inform themselves in advance about the functions and possible uses of genAI tools through research and practical testing. This process is usually done through learning by doing, using simple tasks to evaluate the output and potential of a tool. In addition, programmers often share experiences and best practices in dealing with genAI in self-organized exchanges.

The discussion of creativity in programming focused on finding innovative and original solutions in the development process. Coding is only one part of the work; the emphasis lies on experiential knowledge and the ability to solve problems, including with genAI assistance. An interview partner pointed out:

One still needs domain-specific knowledge of the programming language to assess whether the answer is good. And in the past, Google was your friend, right? Or a search engine of your choice. Ultimately, you still have to know the same things you did before. It’s just that now you’re not just googling, but AI-ing. So, the results are the same. The question is, how fast do you get to the result? Or how precise are the results? (Interview Programming 5)

The use of genAI tools such as ChatGPT was seen by our participants as a mere extension of the existing toolset. What is new is the wide range of possibilities that they offer. For example, ChatGPT can be used to carry out searches in specific cases or to simplify and visualize different solutions. This is recognized by the participants as providing added value because it proposes new and creative solutions provided that the user possesses the relevant experiential knowledge to craft targeted prompts and work conscientiously with the output.

In this regard, the results of our quantitative study emphasized that human creativity and experiential knowledge are essential for critically evaluating the productions of genAI and using it sensibly. Human creativity takes a central role in the effective use of genAI in programming. Creativity was thus understood as originality in the use of genAI (see Figure 2, Statements 2 and 3), enabling a meaningful integration of the tool into the development process through various strategies.

Science

In science, the interview partners discussed how the notion of creativity may evolve due to the use of genAI, understanding creativity in terms of innovativeness and originality. Against this backdrop, one interview partner asked what forms the core of scientific practice. For the humanities and social sciences, the process of writing is considered a crucial element of the creative process, which cannot be substituted by genAI:

[When I say,] ‘it is really great that I have a program that takes over the tedious task of writing for me, then I have more time for research,’ that is, of course, a completely different understanding of research compared to classical text sciences, where I say that the writing itself is the research or that rewriting the text, formulating it several times or finding a formulation, is actually the research, is the scientific engagement with the subject matter. (Interview Science 1a)

Participants also reflected on the analysis of data in the social and natural sciences, which apply quantitative methods. Beyond the actual statistical calculations, data analysis was recognized as a creative process in which original thought is required to raise novel research questions, operationalize them, and discuss results innovatively. One interview partner particularly stressed the importance of human agency:

This evaluation of data records is actually something where we would like to see at least a human component somewhere... Pre-sorting is generally not seen as so problematic. And when it really comes to the evaluation, you have to be careful that you don’t quickly get into an area where I simply feed my program with data and then it spits out a few hypotheses about everything. (Interview Science 1a)

The shared opinion among interview partners was that genAI can be helpful as a sparring partner for discussing new ideas, but the production of new research questions and their methodological operationalization is unique to humans because of their capacity to meaningfully explain why something is innovative and original, beyond statistical probabilities. This attitude was also reflected in the results of our quantitative survey, with the participating science professionals appearing slightly less convinced that genAI use can improve creativity than respondents in other sectors (see Figure 2).

Creativity was seen as crucial not only to the research process but also to reviewing and evaluating papers and grant proposals. As some interview partners stated: “the peer review process itself needs to be something where there’s critical and original thinking on the part of the reviewers… I do not see that being replaced by generative AI tools.” (Interview Science 4a)

They justified this by arguing that this kind of judgement draws on the ability to critically reflect on what constitutes an original innovation, beyond a simple recombination of existing ideas. Nonetheless, the respondents expected genAI to affect the way scientific knowledge will be produced. One research analytics provider saw considerable potential for genAI tools in supporting research activities, but still recognized that these tools should be developed jointly with the users to ensure their accuracy:

The speed and scale of impact is something that will impact the process of science itself and the research community and how research findings are generated and submitted, for example, to scholarly journals in the first place… And there again, I could see continued needs for human oversight to somehow validate the output that comes from this system. (Interview Science 4b)

However, other interviewees argued that the use of genAI might not boost creativity but only increase the volume of academic output:

[AI tools] give me an ease that is almost unbearable, and you produce like a world champion. You’re super productive. But if we all do that, worldwide… we’ll have an inflationary increase in publications that nobody can read. Again, we need AI tools to be able to analyze, aggregate, and work with this. And then, we also need AI to show us the way to new, interesting areas of research. In other words, we use AI for different purposes all the time, so to speak, because the growth alone, i.e., the volume, the quantity, simply overwhelms us as humans. (Interview Science 2)

Our participants feared that the increase in publications would lead to a “vicious circle,” in which using genAI would become essential to cope with the amount of text created by genAI.

Yet, they also understood creativity as extending to how one uses genAI. As one interview partner put it: “you don’t just input a prompt and the text appears, but rather you try to refine it and experiment.” (Interview Science 1b)

Crafting the best prompts to achieve the best results is becoming a recognized aspect of the creativity needed to produce good science.

Coaching

In the field of coaching, participants regarded genAI’s ability to generate diverse ideas and perspectives as adding to the creative toolkit available to coaches. For instance, they described how AI-driven prompts can inspire novel approaches to standard coaching challenges or provide a range of options for tackling common issues such as goal-setting or motivation. Coaches appreciated genAI’s ability to generate ideas, which facilitates brainstorming and problem-solving. The results of the survey mirrored the opinions of our interview partners, with coaches reporting that they found creative and suitable solutions by using ChatGPT (see Figure 2).

However, the interview partners often questioned the depth and usefulness of these AI-generated contributions. They argued that the nuanced understanding of a coachee’s experiences and emotions and the subtleties of human behavior remained distinctly human aspects that genAI could not adequately capture or replicate. As one coach explained: “This process involves so much intuition and emotional involvement. It’s about subtle perceptions, like how a client reacts to certain questions. I just can’t imagine a machine ever learning this.” (Interview Coaching 3)

Accordingly, the true essence of creativity in coaching, which is rooted in deep empathy and genuine human connection, appears beyond the reach of current genAI tools. In fact, the results of the survey indicated that coaches needed creativity to use ChatGPT effectively (see Figure 2). However, the respondents did not expect genAI to be exceptionally creative in supporting processes such as aggregating content and writing invoices but to perform accurately and reliably.

Figure 2: Comparative assessment of the perceived impact of ChatGPT on creativity in IT programming, science, and coaching

4.4 Authenticity

IT Programming

In IT programming, debates about authenticity were limited. Questions about the origin of ideas were rarely discussed, even with the advent of freely accessible genAI. This may be because taking advantage of code snippets from other sources was already common before the introduction of genAI, and genAI is perceived merely as another tool in this context. Constantly integrating new tools to improve one’s work appears to correspond to programmers’ “natural working style” (see Figure 3, Statement 1). As one developer stated: “Some developers just copy code from Stack Overflow, and this is no different from asking an AI assistant. In both cases, it’s foreign code, and the same potential for error exists.” (Interview Programming 5).

However, the question of the correct use of the tools and responsibility for the product presented to the customer sparked debate. Although the occurrence of errors and bugs in code is nothing new, the use of genAI also creates new sources of errors with which the developers are not familiar because it is not their “own code”:

When debugging one’s own code, it’s often easier to identify the problem quickly because the developer had specific intentions and thought processes while writing it. This process of reasoning is missing when working with AI-generated code. As a result, developers might not fully understand the intent behind the code, making it harder to detect and resolve bugs, or even to recognize what’s wrong when an issue arises. (Interview Programming 5).

In particular, genAI-produced code may contain passages that surpass the developer’s experience and knowledge, which can lead to an increased number of subsequent bugs, similar to untested genAI code. Typically, only senior developers have a comprehensive understanding of sources of errors, which are often more profound and only occur in rare cases. Junior developers would likely not recognize these. In this regard, some interviewees reported that senior programmers sometimes offer junior developers regular short meetings to boost their experiential knowledge by sharing their experience. The aim is to help them avoid errors in the future and reduce the time-consuming rework required of senior programmers. Otherwise, sources of error may not be identified due to a lack of knowledge at the junior level and insufficient time at the senior level; as a result, products may be delivered to customers with bugs. Nevertheless, the need to rework code is also understood as part of the job: the interviewees explained that iterative revisions have always been part of the development process, even before genAI tools were introduced. Software products often require updates and optimizations over time, reflecting the evolving nature of the field and technology.

Overall, the participants primarily described genAI tools as support that may be able to take over a large part of the tasks of junior developers in the future. Even for experienced developers, they seem to remain supportive tools rather than collaborative partners that can contribute original ideas.

Science

Authenticity, understood in relation to authorship, was of central concern to all interview partners in the domain of science. On the one hand, they discussed it as a legal question of good scientific practice in producing scientific results and evaluating them. On the other hand, our interview partners debated whether genAI is a tool for assisting scientists or can even be regarded as a “collaborator” with its own ideas. The interviewees agreed that,

from an authorship perspective… essentially, these tools can help with readability, they can help with language. But there are a number of fundamental parts of the authorship process that cannot be replaced, and it still needs human oversight. (Interview Science 4a)

Therefore, genAI cannot attain the status of an author because it cannot take responsibility for the results it produces. The interview partners also agreed that the use of genAI should always be mentioned, specifying the different degrees of involvement of genAI in the research process, from summarizing existing literature to producing research questions. While some participants pointed out that not every conversation between colleagues needed to be reported, others claimed that every single use of genAI must be made transparent:

There is the strict faction that says every prompt must be documented… And then there are the more lenient people who say, ‘well, one should mention which program was used and for roughly what purpose.’ But this can then mean something like, ‘in the creation of Sections 3, 5, and 6, I used the program ChatGPT to generate an initial draft’ or something like that… In between, one can imagine there are many, many ways to regulate this somehow. (Interview Science 1b)

One person, however, cautioned against the possible effects of such transparency on potential reviewers’ perceptions:

Disclosure is important, but it should not create either a negative or positive impact. Nevertheless, we are, of course, not free from this [potential impact]; we do not know what effect it might actually have on one reviewer or another. (Interview Science 3)

Figure 3: Comparative assessment of the perceived impact of ChatGPT on authenticity in IT programming, science, and coaching

Besides responsibility, the validity and reliability of scientific results were discussed as crucial sources of legitimacy for science:

And then you encounter the black box problem, and you see these different dangers quite clearly. So why do we desire a human component in certain matters? Yes, because we consider it particularly important to avoid the unreliability of the program. (Interview Science 1b)

This topic was also reflected in the results of the survey. Respondents working in science were comparatively more cautious than their peers in other fields of work when it came to authenticity, that is, when referring to results developed with genAI as their “own” results (see Figure 3). Especially because of their understanding of creativity and meaningful interpretation as distinctly human abilities, they saw authenticity as central to the reliability and legitimacy of scientific results and felt that it must not be called into question by the black-boxed processes of genAI.

Coaching

Participating coaching professionals viewed an authentic human relationship as paramount in coaching, constituting the bedrock of the coach–coachee relationship. This was expected to involve genuine interactions, sincere emotional engagement, and the mutual trust that enables profound personal growth. Although the use of genAI tools in coaching boosts operational efficiency and provides answers perceived as valid (see Figure 3), it generated considerable concern among the interview partners regarding the preservation of authenticity. One coach emphasized that the relevance of coaches’ inputs depends on authentic human judgement: “The problem with big data is that processing more data doesn’t necessarily lead to better relevance... It’s people who determine what’s relevant.” (Interview Coaching 1)

Accordingly, our interview partners argued that although genAI can process and produce content at remarkable speeds, the depth of understanding and the empathetic connection that form the essence of an authentic coaching relationship cannot be algorithmically replicated. In the interviews, the participants affirmed that the authentic connection and understanding between coach and coachee remained irreplaceably human.

Moreover, the challenge of maintaining authenticity extended to the ethical use of genAI in coaching. All interviewed coaches agreed that transparency regarding the role and extent of genAI’s involvement in the coaching process has become crucial. Coaches must clearly communicate to coachees when and how genAI tools are used to ensure that the coachees understand the nature of these tools and their limitations. One interviewee stated: “As a coach, I’m responsible for knowing what happens with the coachees’ data when using such tools. It’s essential to be transparent about the ethical implications.” (Interview Coaching 2)

This transparency was thought to be essential in preserving the trust and integrity of the coaching relationship.

Despite concerns about the authenticity of the coaching relationship, some interview partners also brought up an intriguing counterpoint about accessibility and the level of comfort genAI tools may offer to some individuals. Specifically, participants recognized the unique potential of anthropomorphized chatbots and AI systems for people who find it challenging to engage in the deeply personal and vulnerable process of coaching with another human. The perceived lack of judgment and anonymity provided by genAI tools were described as lowering barriers to entry for these individuals, allowing them to take a preliminary step toward seeking help. In these instances, genAI was understood not as detracting from the authenticity of the coaching process but as enabling a form of engagement that might not have been possible otherwise.

5 Discussion

The results of our quantitative survey revealed similarities between professionals working with genAI in terms of how these tools affect skills, creativity, and authenticity in their professions, with only minor differences coming to the surface. However, our qualitative interviews pointed to important differences in the meaning assigned to these issues in each professional field. As concerns skills, our interview partners shared the opinion that routine tasks that can be standardized easily could be handled by genAI tools. Nonetheless, the extent to which they regarded tasks as standardizable differed significantly. In programming, genAI was appreciated as support for knowledge-intensive standard operations such as coding solutions and documentation; in contrast, only very limited use of genAI tools was reported in coaching, where they are regarded as usable for reducing the burden of administrative and contextual tasks but not for core coaching functions, which are largely understood as impossible to standardize and automate. In science, we found that the standardization of research tasks depended on the discipline, particularly the status of “text” as either a simple means of communication or the actual research result.

Across all domains, our interview partners also mentioned the need to acquire new skills to be able to apply genAI tools correctly and effectively for specific tasks or, especially in programming and science, to understand the results and how they are achieved. At the same time, however, participants in programming and science also expressed some fear of losing existing skills that would still be necessary to evaluate the results produced by genAI. In all three domains, our participants were therefore convinced that human expertise cannot be replaced but only complemented. However, the interview partners were also clear about the need to augment human expertise to deal with genAI tools.

The role attributed to creativity also varies depending on the processes of knowledge production in the three domains, which are characterized by either narrow or broad problem and solution spaces (Bouschery et al., 2023). In all domains, our interview partners agreed that a certain amount of creativity and original thinking is needed, in addition to knowledge and skills, to come up with new solutions (and new problems) with genAI tools. Yet, the creativity of genAI is judged differently based on how problems and solutions are defined in each domain. In programming, where concrete problems are addressed in a structured way, creativity is limited to the programmer, who uses genAI tools that are not strongly characterized by creativity but can be used to explore alternative solutions. In contrast, coaching consists of situated problem-solving, and coaches are trained to find the right methods and ask the right questions in situations that arise spontaneously during interactions with the coachee. Unlike in programming, the problems to solve are fully open, and an iterative approach is required to find a suitable solution. This process is also seen as leaving little room for creativity in using genAI, apart from assistance in diagnosing problems and proposing pre-given solutions. In science, however, creativity is seen as the central prerequisite for the production of new scientific knowledge, which is understood as a constant search for innovative and original ways to solve existing problems but also to define new ones. Therefore, the contribution of genAI to creativity is a matter of ongoing debate because these tools can develop new research questions, as well as propose ways to tackle them. Their actual creative potential is controversial, however. GenAI is often referred to as a sparring partner for developing new ideas because it can promote free associations, which are involved in the production of scientific knowledge. Yet, the participants argued that human expertise is still needed to ascribe meaning to these associations and transform them into actual research programs. Therefore, we assume that how meaningful (and creative) genAI was perceived to be depends on how open the addressed problems are. The creativity of genAI was seen as limited to solving well-defined problems and assisting in brainstorming processes; everything else was considered to depend on human sense-making.

The starkest differences between the three domains concerned the question of authenticity. In programming, the understanding of authenticity as authorship, namely, who has produced code or owns it, was very limited, notably because of the widespread practice of integrating open-source code. Copyright issues were not discussed in our interviews. Neither was the question of whether the results produced by or with support from genAI should be considered authentic, given that it is common to use supportive tools to facilitate code writing. Meanwhile, in coaching, the authentic “human factor” was most important because the profession is seen as primarily based on human interaction. Therefore, our interview partners stressed the ethical necessity of absolute transparency when “authentic” humans are supported by genAI. In science, authenticity was also discussed as authorship in knowledge production, with a clear focus on taking responsibility for results and their reliability. The ability to reflexively explain results was seen as a prerequisite for legitimate scientific knowledge production, which only humans are able to offer.

References

Acemoglu, D., & Restrepo, P. (2018). The race between man and machine: Implications of technology for growth, factor shares, and employment. American Economic Review, 108(6), 1488 – 1542. https://doi.org/10.1257/aer.20160696

Agre, P. E. (1998). Toward a critical technical practice: Lessons learned in trying to reform AI. In G. Bowker, S. Leigh Star, L. Gasser, & W. Turner (Eds.), Social science, technical systems, and cooperative work (pp. 131 – 157). Psychology Press.

Alawida, M., Mejri, S., Mehmood, A., Chikhaoui, B., & Isaac Abiodun, O. (2023). A comprehensive study of ChatGPT: Advancements, limitations, and ethical considerations in natural language processing and cybersecurity. Information, 14(8), Article 462. https://doi.org/10.3390/info14080462

Autor, D. H. (2015). Why are there still so many jobs? The history and future of workplace automation. Journal of Economic Perspectives, 29(3), 3 – 30. https://doi.org/10.1257/jep.29.3.3

Bainbridge, L. (1983). Ironies of automation. Automatica, 19(6), 775 – 779. https://doi.org/10.1016/0005-1098(83)90046-8

Bergstrom, T., & Ruediger, D. (2024). A third transformation? Generative AI and scholarly publishing. Ithaka S+R. https://doi.org/10.18665/sr.321519

Bouschery, S. G., Blazevic, V., & Piller, F. T. (2023). Augmenting human innovation teams with artificial intelligence: Exploring transformer-based language models. Journal of Product Innovation Management, 40(2), 139 – 153. https://doi.org/10.1111/jpim.12656

Braverman, H. (1979). Labor and monopoly capital: The degradation of work in the twentieth century. Monthly Review Press.

Brüns, J. D., & Meißner, M. (2024). Do you create your content yourself? Using generative artificial intelligence for social media content creation diminishes perceived brand authenticity. Journal of Retailing and Consumer Services, 79, Article 103790. https://doi.org/10.1016/j.jretconser.2024.103790

Brynjolfsson, E., Li, D., & Raymond, L. (2023). Generative AI at work (Working Paper No. 31161). National Bureau of Economic Research. https://doi.org/10.3386/w31161

Brynjolfsson, E., & McAfee, A. (2012). Race against the machine: How the digital revolution is accelerating innovation, driving productivity, and irreversibly transforming employment and the economy. Digital Frontier Press.

Brynjolfsson, E., & McAfee, A. (2014). The second machine age: Work, progress, and prosperity in a time of brilliant technologies. W. W. Norton & Company.

Coy, W. (1994). Expertensysteme. Künstliche Intelligenz auf dem Weg zum Anwender? In G. Cyranek & W. Coy (Eds.), Die maschinelle Kunst des Denkens (pp. 153 – 165). Vieweg+Teubner Verlag. https://doi.org/10.1007/978-3-322-84925-0

Coy, W., & Bonsiepen, L. (1989). Erfahrung und Berechnung. Informatik-Fachberichte. Springer. https://doi.org/10.1007/978-3-642-75217-9

Daugherty, P. R., & Wilson, H. J. (2018). Human + machine: Reimagining work in the age of AI. Harvard Business Review Press.

Davenport, T. H. (2018). The AI advantage: How to put the artificial intelligence revolution to work. The MIT Press.

Dell’Acqua, F., McFowland, E., Mollick, E. R., Lifshitz-Assaf, H., Kellogg, K., Rajendran, S., Krayer, L., Candelon, F., & Lakhani, K. R. (2023). Navigating the jagged technological frontier: Field experimental evidence of the effects of AI on knowledge worker productivity and quality. SSRN Electronic Journal, SSRN Scholarly Paper 4573321. https://doi.org/10.2139/ssrn.4573321

Edwards, R. (1980). Contested terrain: The transformation of the workplace in the twentieth century. Basic Books.

Fecher, B., Hebing, M., Laufer, M., Pohle, J., & Sofsky, F. (2023). Friend or foe? Exploring the implications of large language models on the science system. AI & SOCIETY, 40, 447 – 459. https://doi.org/10.1007/s00146-023-01791-1

Felderer, M., Reussner, R., & Rumpe, B. (2021). Software Engineering und Software-Engineering-Forschung im Zeitalter der Digitalisierung. Informatik Spektrum, 44(2), 82 – 94. https://doi.org/10.1007/s00287-020-01322-y

Ford, M. (2016). Rise of the robots: Technology and the threat of a jobless future. Basic Books.

Frey, C. B., & Osborne, M. A. (2017). The future of employment: How susceptible are jobs to computerisation? Technological Forecasting and Social Change, 114, 254 – 280. https://doi.org/10.1016/j.techfore.2016.08.019

Gilhooly, K. (2024). AI vs humans in the AUT: Simulations to LLMs. Journal of Creativity, 34(1), Article 100071. https://doi.org/10.1016/j.yjoc.2023.100071

Grant, A. M. (2003). The impact of life coaching on goal attainment, metacognition and mental health. Social Behavior and Personality: An International Journal, 31(3), 253 – 263. https://doi.org/10.2224/sbp.2003.31.3.253

Graßmann, C., & Schermuly, C. C. (2021). Coaching with artificial intelligence: Concepts and capabilities. Human Resource Development Review, 20(1), 106 – 126. https://doi.org/10.1177/1534484320982891

Guzik, E. E., Byrge, C., & Gilde, C. (2023). The originality of machines: AI takes the Torrance Test. Journal of Creativity, 33(3), Article 100065. https://doi.org/10.1016/j.yjoc.2023.100065

Haase, J., & Hanel, P. H. P. (2023). Artificial muses: Generative artificial intelligence chatbots have risen to human-level creativity. Journal of Creativity, 33(3), Article 100066. https://doi.org/10.1016/j.yjoc.2023.100066

Hatzius, J., Briggs, J., Kodnani, D., & Pierdomenico, G. (2023). The potentially large effects of artificial intelligence on economic growth. Goldman Sachs. https://www.gspublishing.com/content/research/en/reports/2023/03/27/d64e052b-0f6e-45d7-967b-d7be35fabd16.html

Heinlein, M., & Huchler, N. (2023). Artificial intelligence in the practice of work: A new way of standardising or a means to maintain complexity? Work Organisation, Labour & Globalisation, 17, 34 – 60. https://doi.org/10.13169/workorgalaboglob.17.1.0034

Kern, H., & Schumann, M. (1986). Das Ende der Arbeitsteilung? Rationalisierung in der industriellen Produktion. C. H. Beck.

Kling, R. (1991). Computerization and social transformations. Science, Technology, & Human Values, 16(3), 342 – 367. https://doi.org/10.1177/016224399101600304

Kling, R. (2002). Critical professional discourses about information and communications technologies and social life in the U.S. In K. Brunnstein & J. Berleur (Eds.), Human choice and computers: Issues of choice and quality of life in the information society (pp. 1 – 20). Springer US. https://doi.org/10.1007/978-0-387-35609-9_1

Korkmaz, A., Aktürk, C., & Talan, T. (2023). Analyzing the user’s sentiments of ChatGPT using Twitter data. Iraqi Journal for Computer Science and Mathematics, 4(2), 202 – 214. https://doi.org/10.52866/ijcsm.2023.02.02.018

Korzynski, P., Mazurek, G., Altmann, A., Ejdys, J., Kazlauskaite, R., Paliszkiewicz, J., Wach, K., & Ziemba, E. (2023). Generative artificial intelligence as a new context for management theories: Analysis of ChatGPT. Central European Management Journal, 31(1), 3 – 13. https://doi.org/10.1108/CEMJ-02-2023-0091

Liu, Z., Tang, Y., Luo, X., Zhou, Y., & Zhang, L. F. (2024). No need to lift a finger anymore? Assessing the quality of code generation by ChatGPT. IEEE Transactions on Software Engineering, 50(6), 1548 – 1584. https://doi.org/10.1109/TSE.2024.3392499

Mei, Q., Xie, Y., Yuan, W., & Jackson, M. O. (2024). A Turing test of whether AI chatbots are behaviorally similar to humans. Proceedings of the National Academy of Sciences, 121(9), Article e2313925121. https://doi.org/10.1073/pnas.2313925121

Mowshowitz, A. (1981). On approaches to the study of social issues in computing. Communications of the ACM, 24(3), 146 – 155. https://doi.org/10.1145/358568.358592

Mühlhoff, R. (2025). The ethics of AI: Power, critique, responsibility. Bristol University Press. https://doi.org/10.51952/9781529249262

Nguyen-Duc, A., Cabrero-Daniel, B., Arora, C., Przybylek, A., Khanna, D., Herda, T., Rafiq, U., Melegati, J., Guerra, E., Kemell, K.-K., Saari, M., Zhang, Z., Le, H., Quan, T., & Abrahamsson, P. (2023). Generative artificial intelligence for software engineering: A research agenda (SSRN Scholarly Paper No. 4622517). Social Science Research Network. https://doi.org/10.2139/ssrn.4622517

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. Science, 381(6654), 187 – 192. https://doi.org/10.1126/science.adh2586

Passmore, J., & Woodward, W. (2023). Coaching education: Wake up to the new digital and AI coaching revolution! International Coaching Psychology Review, 18(1), 58 – 72. https://doi.org/10.53841/bpsicpr.2023.18.1.58

Ramge, T. (2020). Augmented intelligence: Wie wir mit Daten und KI besser entscheiden. Reclam.

Runco, M. A. (2023). AI can only produce artificial creativity. Journal of Creativity, 33(3), Article 100063. https://doi.org/10.1016/j.yjoc.2023.100063

Samuelson, P. (2023). Generative AI meets copyright. Science, 381(6654), 158 – 161. https://doi.org/10.1126/science.adi0656

Stack Overflow. (2023). Developer Survey 2023. https://survey.stackoverflow.co/2023/#ai

Stack Overflow. (2024). Developer Survey 2024. https://survey.stackoverflow.co/2024/ai

Stokel-Walker, C., & Van Noorden, R. (2023). What ChatGPT and generative AI mean for science. Nature, 614(7947), 214 – 216. https://doi.org/10.1038/d41586-023-00340-6

Taecharungroj, V. (2023). “What can ChatGPT do?” Analyzing early reactions to the innovative AI chatbot on Twitter. Big Data and Cognitive Computing, 7(1), Article 35. https://doi.org/10.3390/bdcc7010035

Terblanche, N. H. D. (2024). Artificial intelligence (AI) coaching: Redefining people development and organizational performance. The Journal of Applied Behavioral Science, 60(4), 631 – 638. https://doi.org/10.1177/00218863241283919

Van Dis, E. A. M., Bollen, J., Zuidema, W., Van Rooij, R., & Bockting, C. L. (2023). ChatGPT: Five priorities for research. Nature, 614(7947), 224 – 226. https://doi.org/10.1038/d41586-023-00288-7

Vredenburgh, K. (2022). The right to explanation. Journal of Political Philosophy, 30(2), 209 – 229. https://doi.org/10.1111/jopp.12262

Weizenbaum, J. (1976). Computer power and human reason: From judgment to calculation. W. H. Freeman and Company.

Zhang, Q. (2024). The role of artificial intelligence in modern software engineering. Applied and Computational Engineering, 97, 18 – 23. https://doi.org/10.54254/2755-2721/97/20241339

Date received: 14 February 2025

Date accepted: 4 September 2025

1 Participants in all surveys were compensated at a rate of £12 per hour. Initially, 385 individuals were recruited. However, participants who reported not actively using ChatGPT were excluded to keep the focus on individuals with current and relevant experience of genAI. This criterion yielded a final sample of 331 participants, distributed across the three domains as follows: 105 in IT, with 25.7% female participants and a mean age of 31.32 years (SD = 10.35); 106 in science, with 54.7% female participants and a mean age of 30.15 years (SD = 5.79); and 120 in coaching, with 46.7% female participants and a mean age of 34.26 years (SD = 10.22).

License

Copyright (c) 2025 Florian Butollo, Jennifer Haase, Ann-Kathrin Katzinski, Anne K. Krüger (Author)

This work is licensed under a Creative Commons Attribution 4.0 International License.